“People search for certainty. But there is no certainty.”

“人们追寻确定性,但是确定性并不存在”

-- Richard Phillips Feynman (理查德·菲利普斯·费曼)

I am an Assistant Researcher in the College of Computer Science & Software Engineering at Hohai University. I obtained my Ph.D. degree from the Department of Computer Science & Technology at Nanjing University in 2022, where I was very fortunate to be advised by Prof. Zhi-Hua Zhou. Before that, I received my B.Sc. degree from the Department of Statistics at the University of Science and Technology of China in 2017.

My research interests include artificial intelligence, machine learning, and optimization. I mainly focus on building the theoretical foundations of ensemble learning and on its application to complex optimization problems in water resources. I have published 18 papers in AI-related journals (ISJ, NNJ) and conferences (ICML, NeurIPS, ICLR). I am a recipient of the Hong Kong Scholars Award (2024) and have served as principal investigator for grants from the National Natural Science Foundation of China for Young Scholars (2023) and the China Postdoctoral Science Foundation Special Funding (2023). Here are my résumé and Chinese CV (中文简历).

🔥 News

- Enrolling Students: Looking for self-motivated M.Sc. and Ph.D. students to work on Artificial Intelligence.

Feel free to send me an email with your resume and a document stating your research motivation.

👨‍💻 Students

M.Eng Students

2023: [Tian-Shuang Wu 吴填双] (1 paper in a CAS Q1 Top journal, 1 CCF-C paper); [Ning Chen 陈宁] (1 paper in a CAS Q1 Top journal, 1 CCF-C paper, 1 AI4Water interdisciplinary paper)

2024: [Jia-Le Xu 许佳乐]

2025: [Yu Huang 黄宇]; [Yu-Nian Wang 王雨年]; [Lin-Kun Cui 崔林坤]

B.Eng Students

2024: [Zhong-Shi Chen 陈仲石] (Admitted to NJU); [Jin-Han Xin 辛金韩] (Admitted to SEU)

2025: [Guang-Hao Ding 丁广浩] (Admitted to NEU); [Xun-Hao Zheng 郑旬澔] (Admitted to HHU); [Ying Zhou 周滢] (Admitted to HHU); [Wen Ye 叶玟] (Admitted to HHU)

2026: [Xiao-Tong Liu 刘晓彤]

2027: [Ling-Feng Wang 汪凌烽]

Collected Seminars

[ML&DM Seminar]: Seminar on Machine Learning and Data Mining for my students.

[FAI Seminar]: International Seminar on Foundational Artificial Intelligence.

📝 Publications

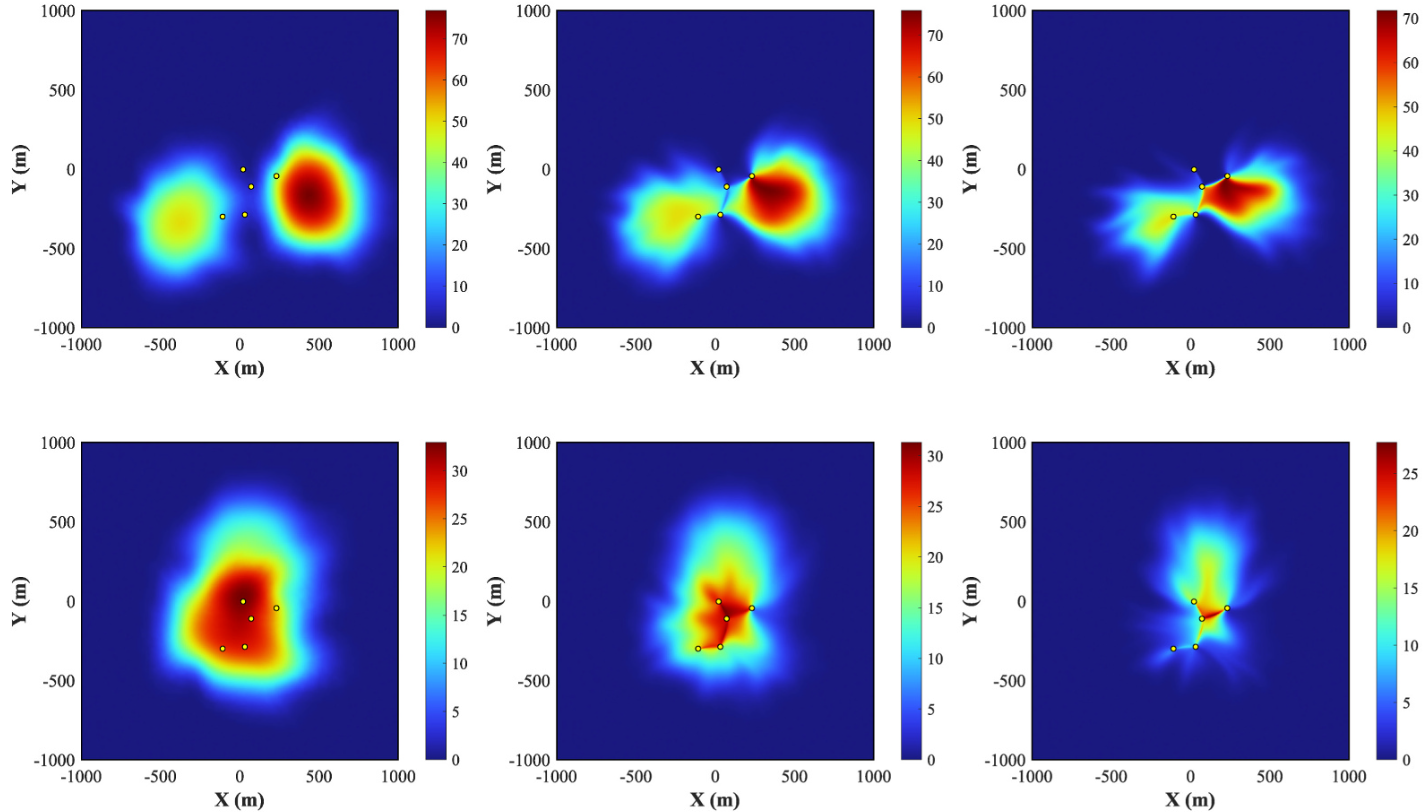

- [JCH 2026] A Multiple Surrogate Simulation-Optimization Framework for Designing Pump-and-Treat Systems. [paper] [bib]

Chaoqi Wang, Zhi Dou#, Ning Chen, Yan Zhu, Zhihan Zou, Jian Song, and Shen-Huan Lyu#. (# indicates correspondence.)

Journal of Contaminant Hydrology, 227:104876, 2026. (GSC T2, CAS Q3)

- [PRL 2026] Compressing Model with Few Class-Imbalance Samples: An Out-of-Distribution Expedition. [paper] [bib]

Tian-Shuang Wu, Shen-Huan Lyu#, Yanyan Wang#, Ning Chen, Zhihao Qu, and Baoliu Ye. (# indicates correspondence.)

Pattern Recognition Letters, 201:117-124, 2026. (CCF C, CAS Q3)

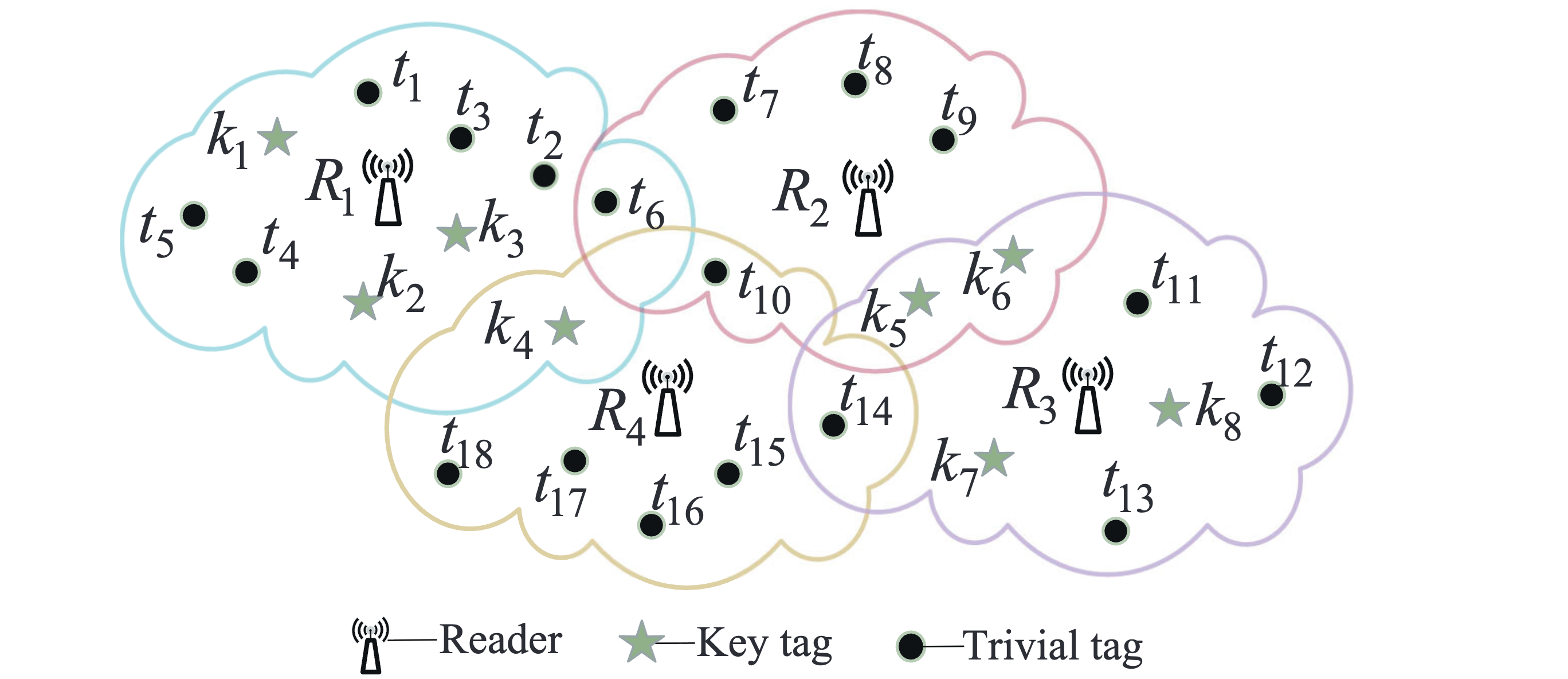

- [TMC 2026] Time-Efficient Identifying Key Tag Distribution in Large-Scale RFID Systems. [paper] [bib]

Yanyan Wang, Jia Liu, Zhihao Qu, Shen-Huan Lyu, Bin Tang, and Baoliu Ye.

IEEE Transactions on Mobile Computing, 25(2):2725-2742, 2026. (CCF A, CAS Q1)

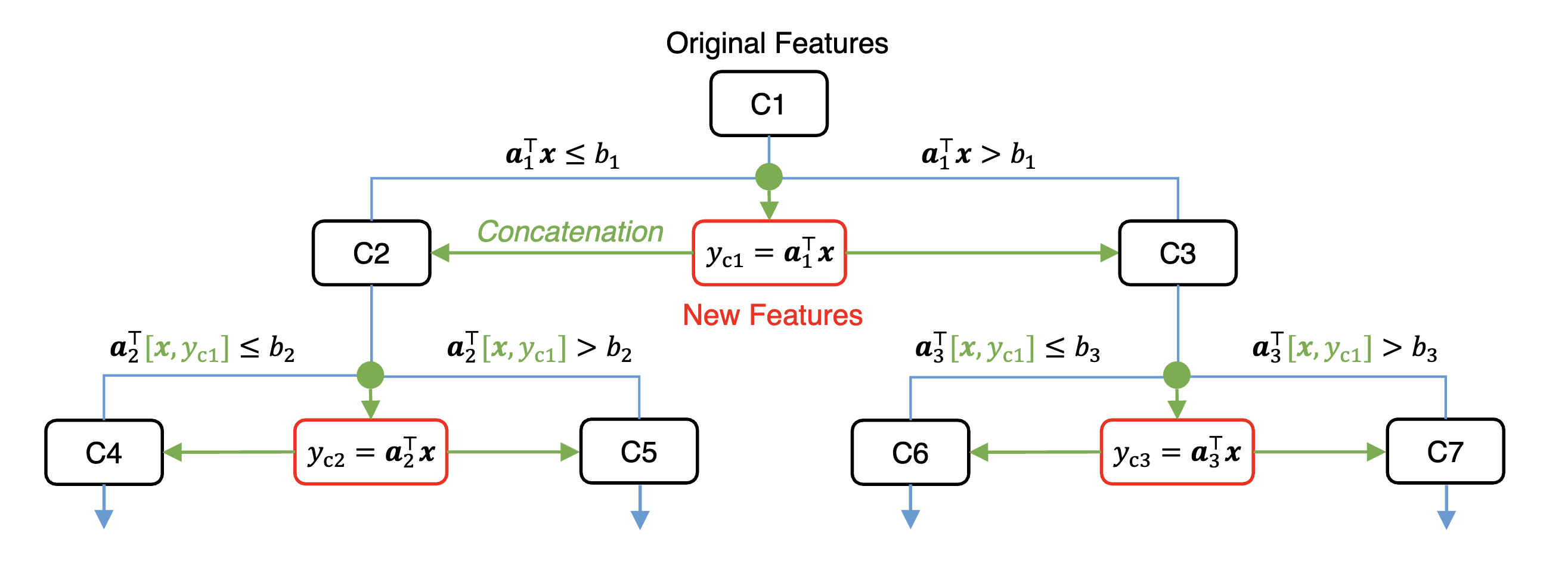

- [INS 2025] Enhance Learning Efficiency of Oblique Decision Tree via Feature Concatenation. [paper] [bib]

Shen-Huan Lyu, Yi-Xiao He, Yanyan Wang, Zhihao Qu, Bin Tang, and Baoliu Ye.

Information Sciences, 721:122613, 2025. (CCF B, CAS Q1)

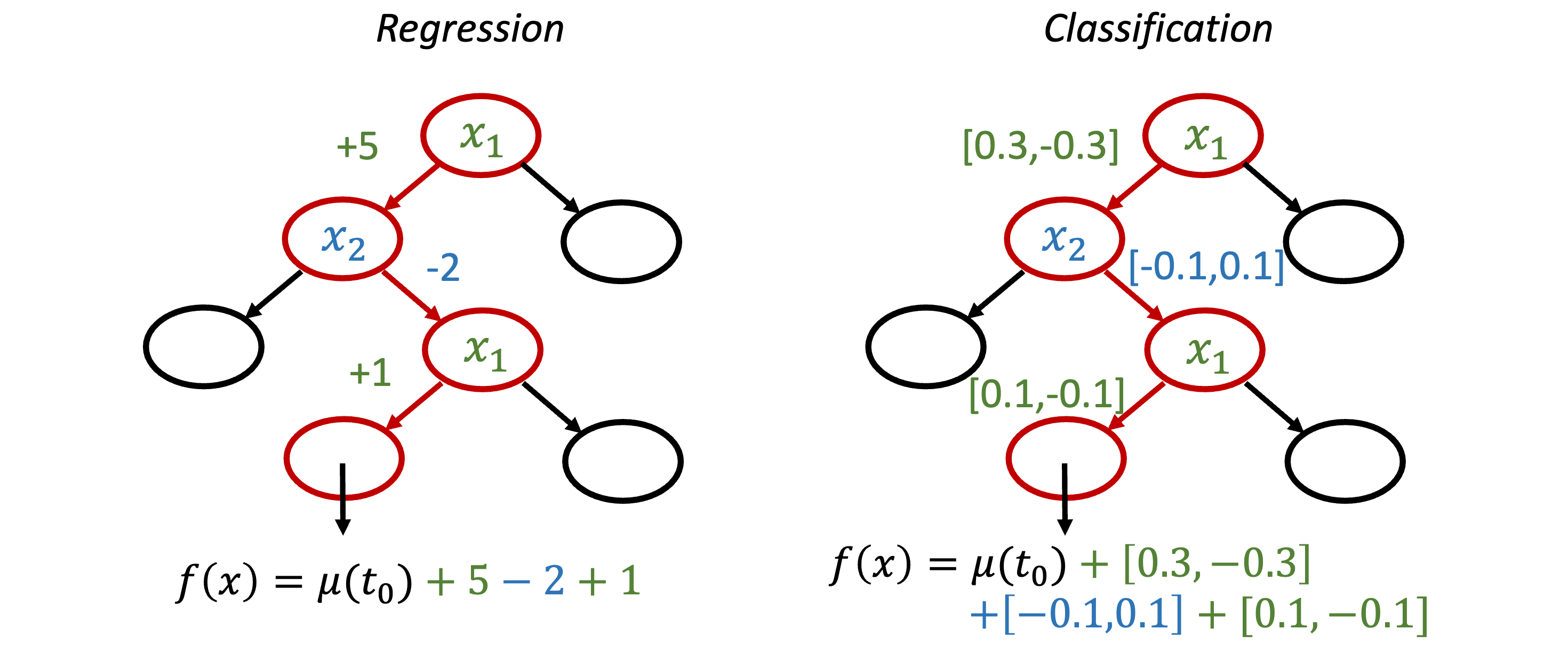

- [TKDD 2025] Interpreting Deep Forest through Feature Contribution and MDI Feature Importance. [paper] [code] [bib]

Yi-Xiao He, Shen-Huan Lyu, and Yuan Jiang.

ACM Transactions on Knowledge Discovery from Data, 20(1), 15:1-21, 2025. (CCF B, CAS Q3)

-

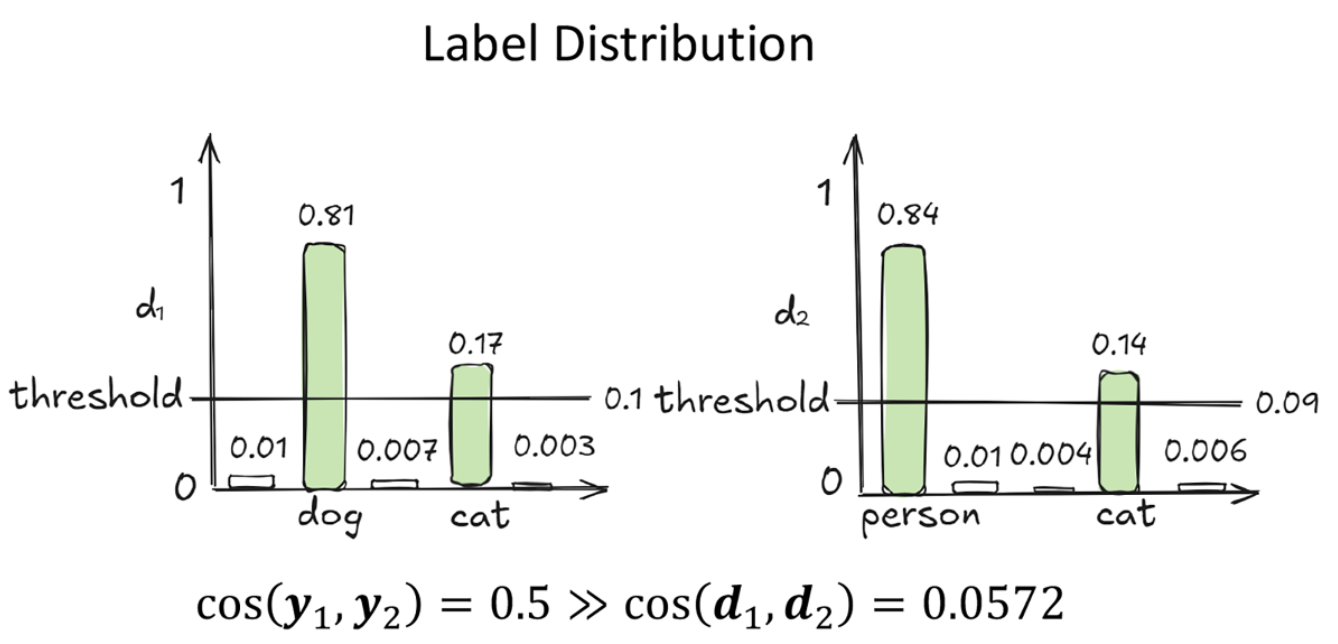

[IPPR 2025] Learning Semantic Boundaries: An Adaptive Structural Loss for Multi-Label Contrastive Learning. [paper] [bib]

Ning Chen, Shen-Huan Lyu#, Yanyan Wang#, and Bin Tang. (# indicates correspondence.)

In: Proceedings of the 2nd IEEE International Conference on Intelligent Perception and Pattern Recognition, pp. 204-210, Chongqing, China, 2025.

- [IWQoS 2025] Multi-Range Query in Commodity RFID Systems. [paper] [bib]

Yanyan Wang, Jia Liu, Zhihao Qu#, Shen-Huan Lyu#, Bin Tang, and Baoliu Ye. (# indicates correspondence.)

In: Proceedings of the 33rd IEEE/ACM International Symposium on Quality of Service, pp. 1-10, Gold Coast, Australia, 2025. (CCF B)

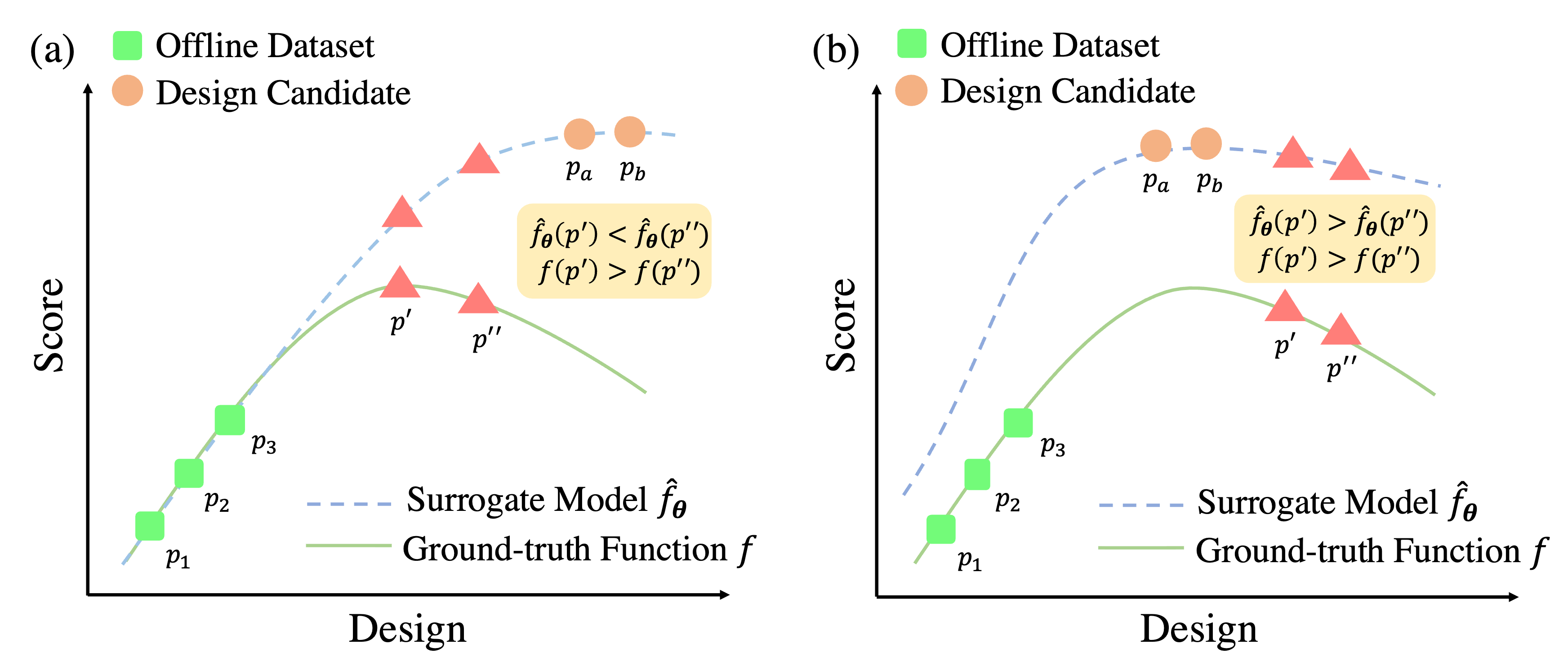

- [ICLR 2025] Offline Model-Based Optimization by Learning to Rank. [paper] [bib]

Rong-Xi Tan, Ke Xue, Shen-Huan Lyu, Haopu Shang, Yao Wang, Yaoyuan Wang, Sheng Fu, and Chao Qian.

In: Proceedings of the 13th International Conference on Learning Representations, pp. 1-17, Singapore, 2025.

- [PRICAI 2024] Personalized Federated Learning with Feature Alignment via Knowledge Distillation. [paper] [bib]

Guangfei Qi, Zhihao Qu, Shen-Huan Lyu, Ninghui Jia, and Baoliu Ye.

In: Proceedings of the 21st Pacific Rim International Conference on Artificial Intelligence, pp. 121-133, Kyoto, Japan, 2024. (CCF C)

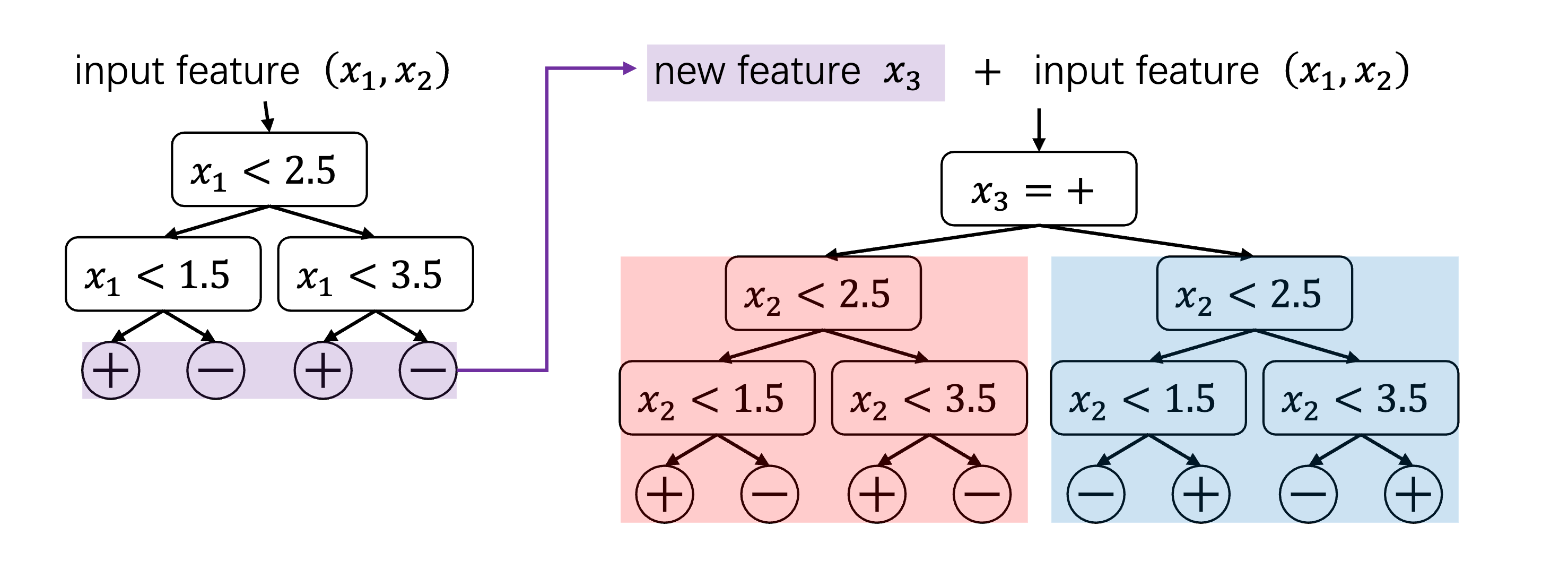

- [ECAI 2024] The Role of Depth, Width, and Tree Size in Expressiveness of Deep Forest. [paper] [code] [bib]

Shen-Huan Lyu*, Jin-Hui Wu*, Qin-Cheng Zheng, and Baoliu Ye. (* indicates equal contribution)

In: Proceedings of the 27th European Conference on Artificial Intelligence, pp. 2042-2049, Santiago de Compostela, Spain, 2024. (CCF B)

- [ECAI 2024] Mask-Encoded Sparsification: Overcoming Biased Gradients for Communication-Efficient Split Learning. [paper] [bib]

Wenxuan Zhou, Zhihao Qu, Shen-Huan Lyu, Miao Cai, and Baoliu Ye.

In: Proceedings of the 27th European Conference on Artificial Intelligence, pp. 2806-2813, Santiago de Compostela, Spain, 2024. (CCF B)

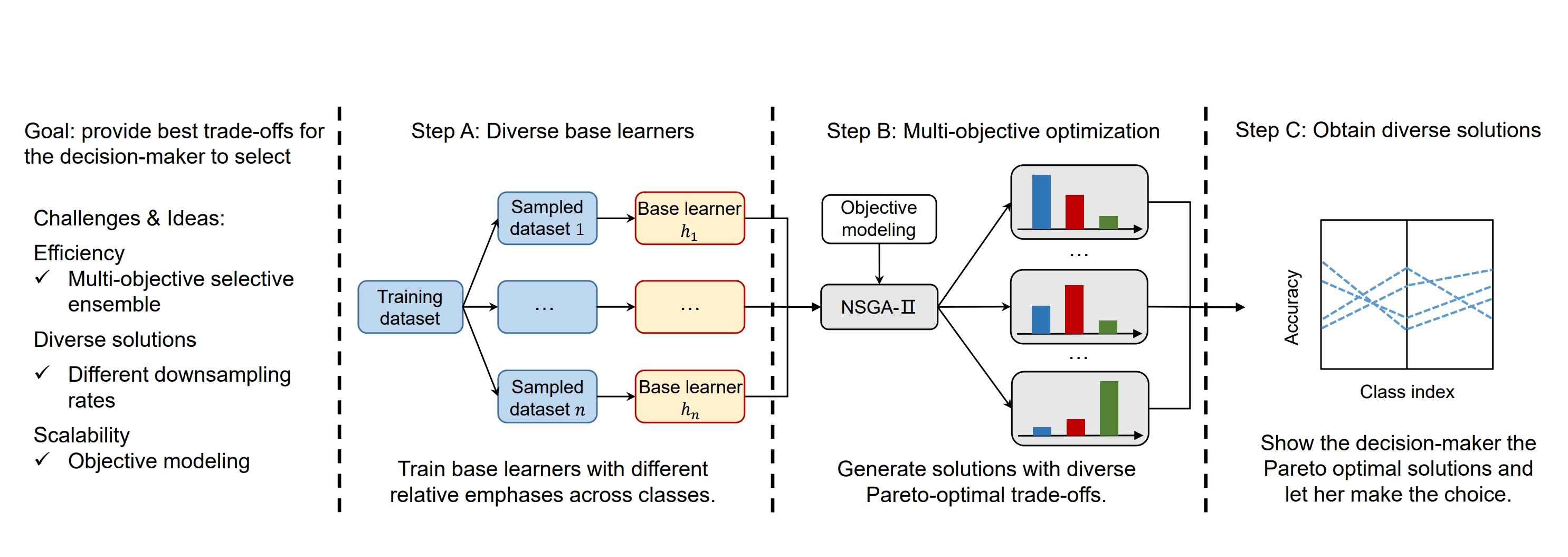

- [INS 2024] Multi-Class Imbalance Problem: A Multi-Objective Solution. [paper] [code] [bib]

Yi-Xiao He, Dan-Xuan Liu, Shen-Huan Lyu, Chao Qian, and Zhi-Hua Zhou.

Information Sciences, 680:121156, 2024. (CCF B, CAS Q1)

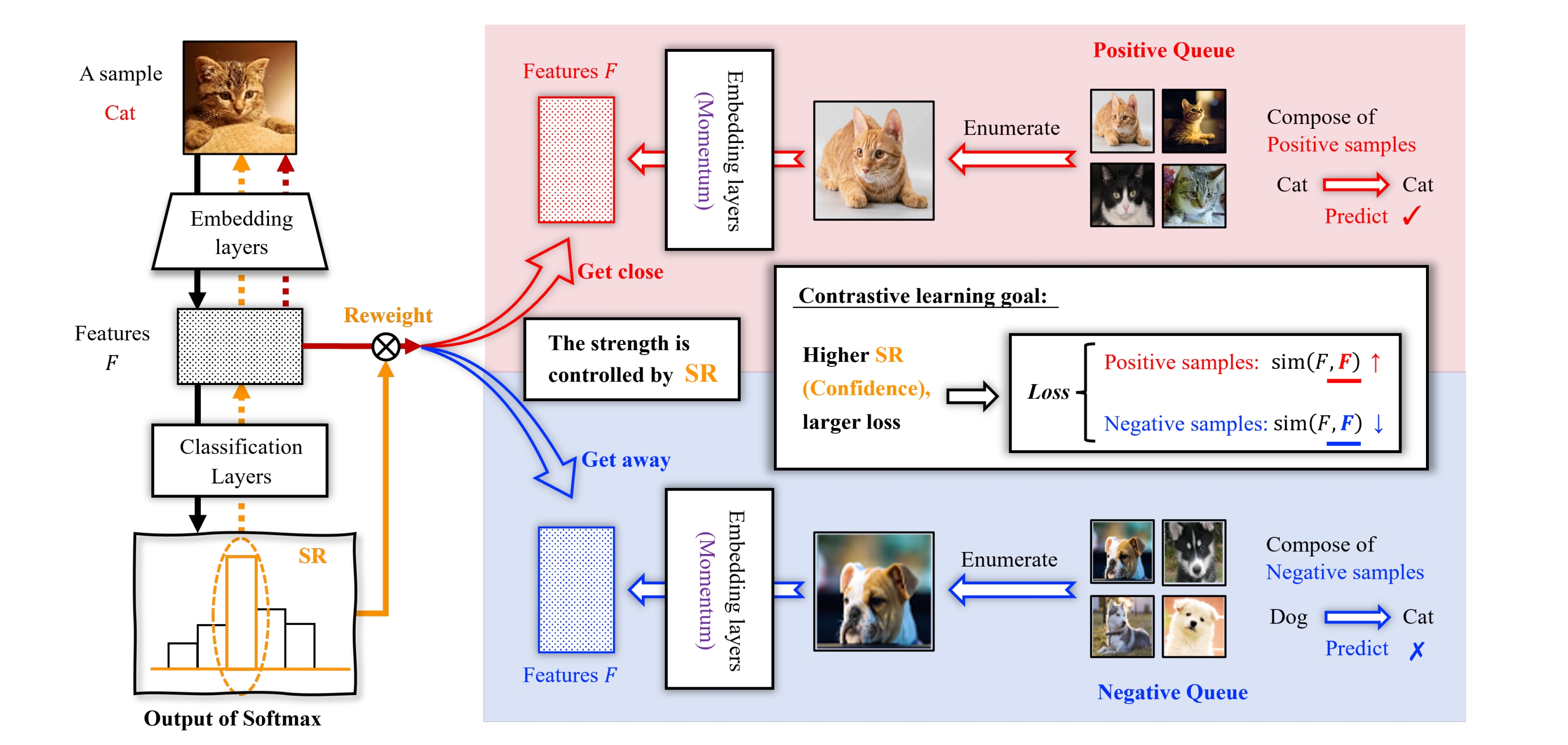

- [ICML 2024] Confidence-Aware Contrastive Learning for Selective Classification. [paper] [code] [bib]

Yu-Chang Wu, Shen-Huan Lyu, Haopu Shang, Xiangyu Wang, and Chao Qian.

In: Proceedings of the 41st International Conference on Machine Learning, pp. 53706-53729, Vienna, Austria, 2024. (CCF A)

- [IWQoS 2024] Identifying Key Tag Distribution in Large-Scale RFID Systems. [paper] [bib]

Yanyan Wang, Jia Liu, Shen-Huan Lyu, Zhihao Qu, Bin Tang, and Baoliu Ye.

In: Proceedings of the 32nd IEEE/ACM International Symposium on Quality of Service, pp. 1-10, Guangzhou, China, 2024. (CCF B)

- [JOS 2024] Interaction Representations Based Deep Forest Method in Multi-Label Learning. [paper] [bib]

Shen-Huan Lyu, Yi-He Chen, and Yuan Jiang.

Journal of Software, 35(4):1934-1944, 2024. (CCF A in Chinese)

- [AISTATS 2023] On the Consistency Rate of Decision Tree Learning Algorithms. [paper] [code] [bib]

Qin-Cheng Zheng, Shen-Huan Lyu, Shao-Qun Zhang, Yuan Jiang, and Zhi-Hua Zhou.

In: Proceedings of the 26th International Conference on Artificial Intelligence and Statistics, pp. 7824-7848, Valencia, ES, 2023. (CCF C)

- [NeurIPS 2022 Oral] Depth is More Powerful than Width with Prediction Concatenation in Deep Forests. [paper] [bib]

Shen-Huan Lyu, Yi-Xiao He, and Zhi-Hua Zhou.

In: Advances in Neural Information Processing Systems 35, pp. 29719-29732, New Orleans, Louisiana, US, 2022. (CCF A)

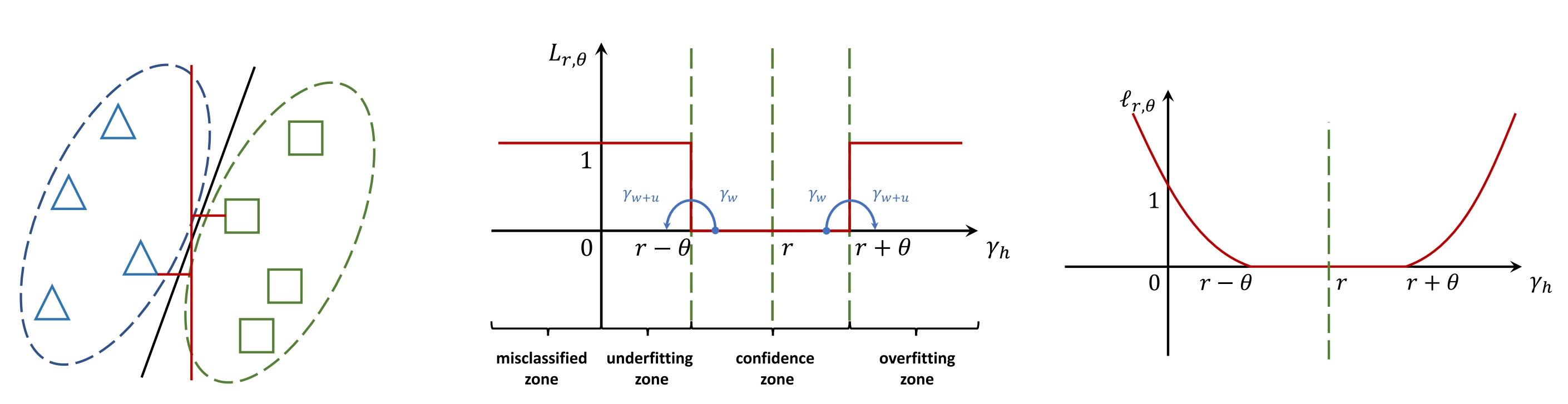

- [NEUNET 2022] Improving Generalization of Neural Networks by Leveraging Margin Distribution. [paper] [code] [bib]

Shen-Huan Lyu, Lu Wang, and Zhi-Hua Zhou.

Neural Networks, 151:48-60, 2022. (CCF B, CAS Q1)

- [CJE 2022] A Region-Based Analysis for the Feature Concatenation in Deep Forests. [paper] [bib]

Shen-Huan Lyu, Yi-He Chen, and Zhi-Hua Zhou.

Chinese Journal of Electronics, 31(6):1072-1080, 2022. (CCF A in Chinese, CAS Q4)

- [ICDM 2021] Improving Deep Forest by Exploiting High-Order Interactions. [paper] [code] [bib]

Yi-He Chen*, Shen-Huan Lyu*, and Yuan Jiang. (* indicates equal contribution)

In: Proceedings of the 21st IEEE International Conference on Data Mining, pp. 1030-1035, Auckland, NZ, 2021. (CCF B)

- [NeurIPS 2019] A Refined Margin Distribution Analysis for Forest Representation Learning. [paper] [code] [bib]

Shen-Huan Lyu, Liang Yang, and Zhi-Hua Zhou.

In: Advances in Neural Information Processing Systems 32, pp. 5531-5541, Vancouver, British Columbia, CA, 2019. (CCF A)

🎖 Honors and Awards

- 2025.02 The Hong Kong Scholars Program (中国博士后科学基金委“香江学者”计划), China.

- 2024.07 Jiangsu Youth Science and Technology Talent Sponsorship Program (江苏省科协青年科技人才托举工程), Jiangsu.

- 2023.12 Excellent Doctoral Dissertation of Jiangsu Artificial Intelligence Society (江苏省人工智能学会优博), Jiangsu.

- 2023.06 The 5th Special Funding from China Postdoctoral Science Foundation (中国博士后科学基金委特别资助项目), China.

- 2022.12 Jiangsu Excellent Postdoctoral Program (江苏省卓越博士后计划), Jiangsu.

- 2019.10 Nanjing Artificial Intelligence Industry Talent Program (南京市人工智能产业人才兴智计划), Nanjing.

- 2017.09 Presidential Special Scholarship for Ph.D. Students at Nanjing University (南京大学博士研究生校长特别奖学金), Nanjing.

✨ Academic Service

Senior Program Committee Member of Conferences:

- IJCAI: 2021

Program Committee Member of Conferences:

- ICML: 2021-2026

- NeurIPS: 2020-2025

- AAAI: 2020, 2021, 2023-2026

- IJCAI: 2020, 2022-2026

- ICLR: 2020-2025

- AISTATS: 2023-2025

Reviewer for Journals:

- Science China Information Sciences (SCIS)

- Journal of Machine Learning Research (JMLR)

- Artificial Intelligence (AIJ)

- IEEE Transactions on Pattern Analysis and Machine Intelligence (TPAMI)

- IEEE Transactions on Knowledge and Data Engineering (TKDE)

- IEEE Transactions on Neural Networks and Learning Systems (TNNLS)

- ACM Transactions on Knowledge Discovery from Data (TKDD)

- Machine Learning (MLJ)

- Research

- Chinese Journal of Electronics (CJE)

- Journal of Software (软件学报, JOS)

📖 Education

- 2017.09 - 2022.12, Ph.D. in Computer Science, Nanjing University (NJU)

- 2013.09 - 2017.06, B.Sc. in Statistics, University of Science and Technology of China (USTC)

💬 Invited Talks

- 2023.11, Deep Forest, Z-Park National Laboratory, Beijing.

- 2022.12, Depth is More Powerful than Width, New Orleans Convention Center, Online.

- 2022.01, Margin Distribution Neural Networks, Huawei Noah’s Ark Lab, Online.

💻 Experience

- 2025.02 - now, Hong Kong Scholar at City University of Hong Kong, Hong Kong.

- 2022.12 - now, Assistant Researcher at Hohai University, Nanjing.

- 2022.06 - 2022.08, Machine Learning Engineer at Huawei Noah’s Ark Lab, Nanjing.